Here We Go!

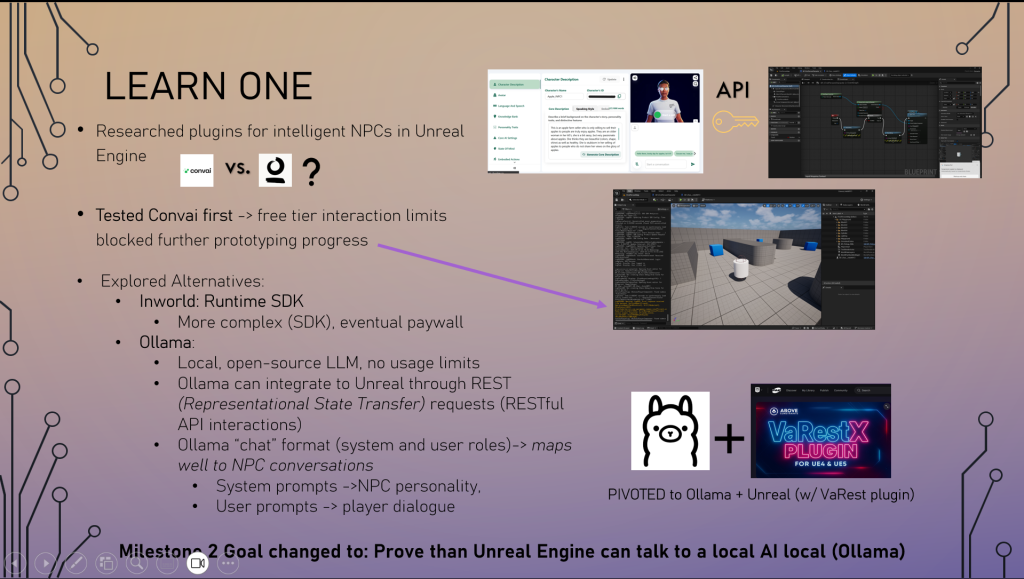

Picking up from last week: there are no step-by-step guides to this “intelligent NPC” thing, and not a lot of documentation. In fact, my plan to use Ollama + Unreal Engine seemed nearly impossible…

However, in the name of experimentation…I dove right in!

Progress (What DID I Do?)

Finding Square One

To start, I learned that Ollama (an open-source tool for running LLMs locally) works by hosting a tiny web server on my machine at:

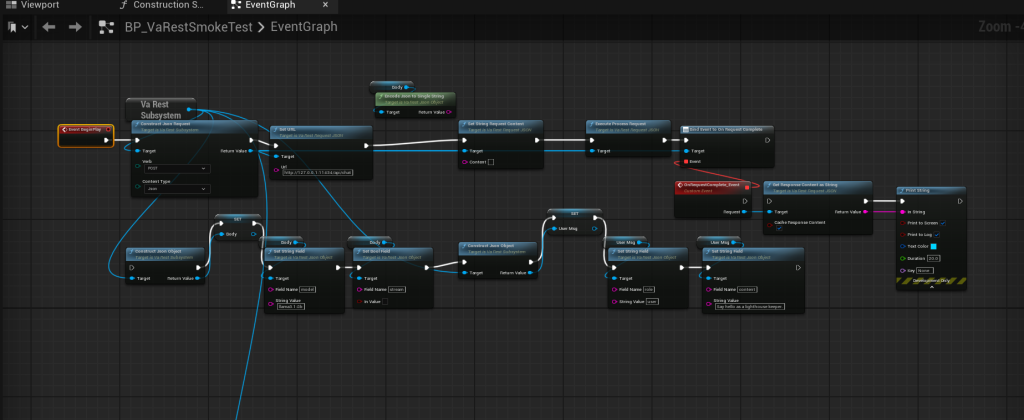

http://127.0.0.1:11434/api/chat OR http://localhost:11434/api/chat

I learned that Ollama's API only communicates through HTTP requests that carry JSON.
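Outside of Unreal, that kind of request can be sketched in Python with only the standard library. This is a minimal sketch that assumes Ollama is running on its default port. The model name `llama3` is a placeholder for whatever model you have installed, and `build_chat_request` is a hypothetical helper name. The code builds a POST request with a JSON body but does not send it:

```python
import json
import urllib.request

# Default local Ollama chat endpoint (localhost and 127.0.0.1 both point at this machine).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(body: dict, url: str = OLLAMA_CHAT_URL) -> urllib.request.Request:
    """Hypothetical helper: wrap a dict as a JSON HTTP POST request to Ollama."""
    data = json.dumps(body).encode("utf-8")  # JSON text -> bytes for the HTTP body
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request({
    "model": "llama3",  # placeholder model tag
    "messages": [{"role": "user", "content": "Hello!"}],
    "stream": False,
})

# To actually send it (requires Ollama running locally):
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read()))
```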

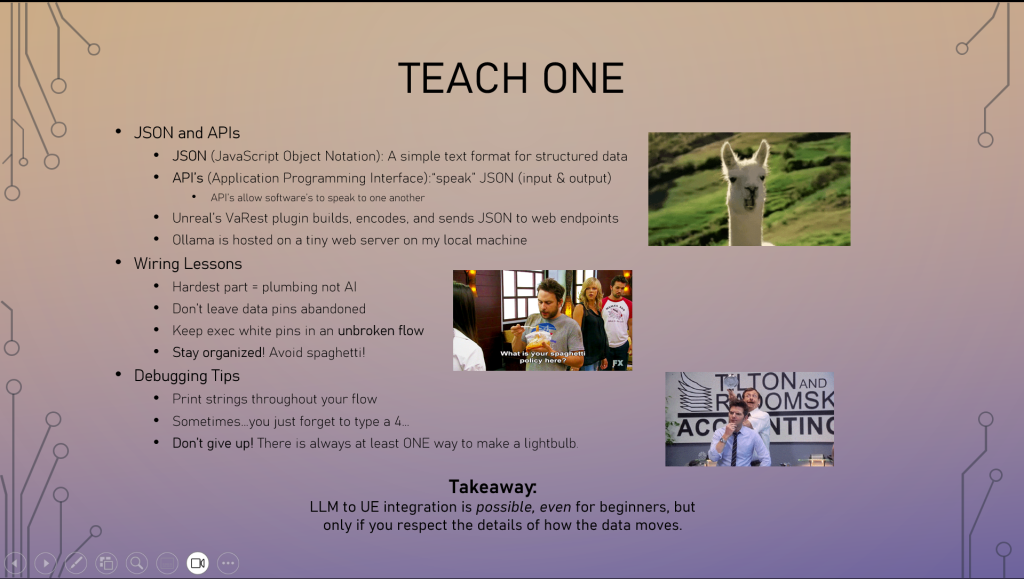

JSON (JavaScript Object Notation) is a simple text format for structured data. This "lifeblood" of modern technology is how most modern APIs exchange data, because it is easy for both humans and computers to read.
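Here is a small illustration of JSON as plain text: an NPC chat message stored as a Python dictionary, turned into JSON text, and then parsed back. The field names follow Ollama's chat messages. The blacksmith line is invented for the example:

```python
import json

# A chat message as a Python dictionary (keys mirror Ollama's message objects).
message = {"role": "system", "content": "You are Bram, a grumpy blacksmith."}

# Serialize to JSON text: this string is what actually travels over HTTP.
text = json.dumps(message)
print(text)  # {"role": "system", "content": "You are Bram, a grumpy blacksmith."}

# Parse the JSON text back into a dictionary.
decoded = json.loads(text)
print(decoded["content"])  # You are Bram, a grumpy blacksmith.
```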

An API (Application Programming Interface) is a set of defined rules that allows programs to communicate with each other (web APIs usually do this with JSON).

Ollama's API is a RESTful API with endpoints. REST (Representational State Transfer) is the architectural style web services are typically designed around. An endpoint is the specific web address that requests are sent to, such as /api/chat.
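To make the endpoint idea concrete, this short sketch uses Python's standard `urllib.parse` to split the chat URL into its parts. For comparison it also uses `/api/tags`, which is Ollama's endpoint for listing locally installed models (the HTTP counterpart of the `ollama list` command):

```python
from urllib.parse import urljoin, urlsplit

BASE_URL = "http://localhost:11434"

# Same server, different endpoints (paths) for different jobs.
chat_url = urljoin(BASE_URL, "/api/chat")  # send a conversation, get a reply
tags_url = urljoin(BASE_URL, "/api/tags")  # list locally installed models

parts = urlsplit(chat_url)
print(parts.scheme, parts.hostname, parts.port, parts.path)
# http localhost 11434 /api/chat
```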

I also learned from the Ollama documentation that:

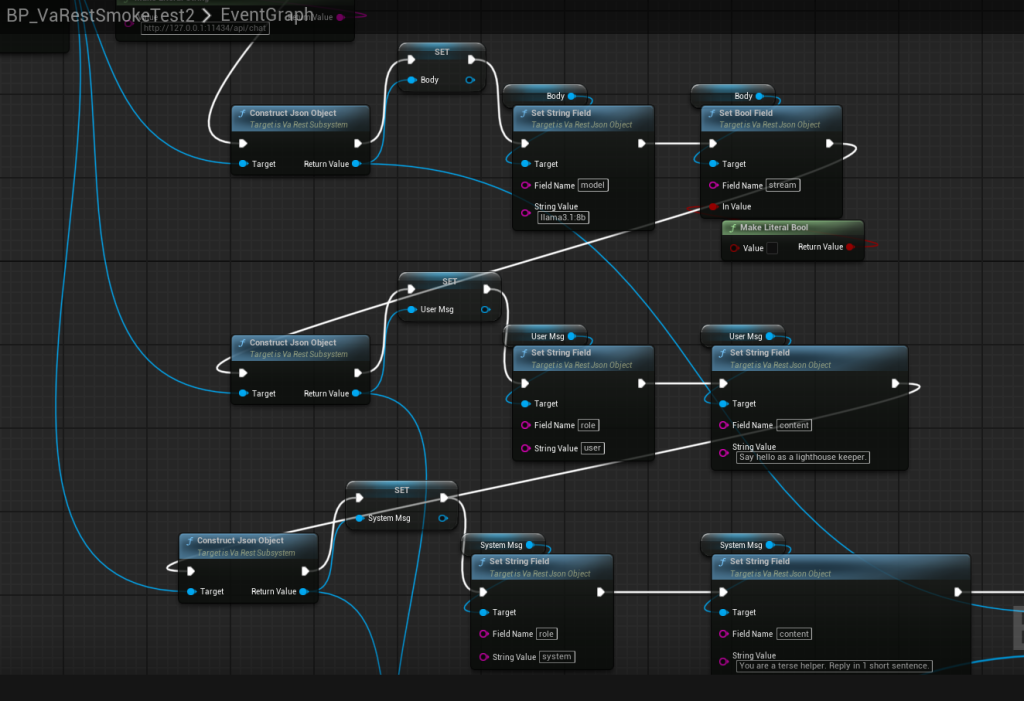

The Ollama “chat” format seemed to map well onto typical in-game NPC conversations because it uses system and user roles:

System prompts = NPC personality

User prompts = Player dialogue
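That mapping can be sketched as a small helper that turns an NPC personality and a line of player dialogue into Ollama's messages array. `build_messages` and the innkeeper personality are hypothetical. They are not part of Ollama or VaRest:

```python
def build_messages(npc_personality: str, player_line: str) -> list[dict]:
    """Hypothetical helper: system prompt = NPC personality, user prompt = player dialogue."""
    return [
        {"role": "system", "content": npc_personality},
        {"role": "user", "content": player_line},
    ]


messages = build_messages(
    "You are Mira, a cheerful innkeeper. Answer in one or two sentences.",
    "Do you have any rooms free tonight?",
)
```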

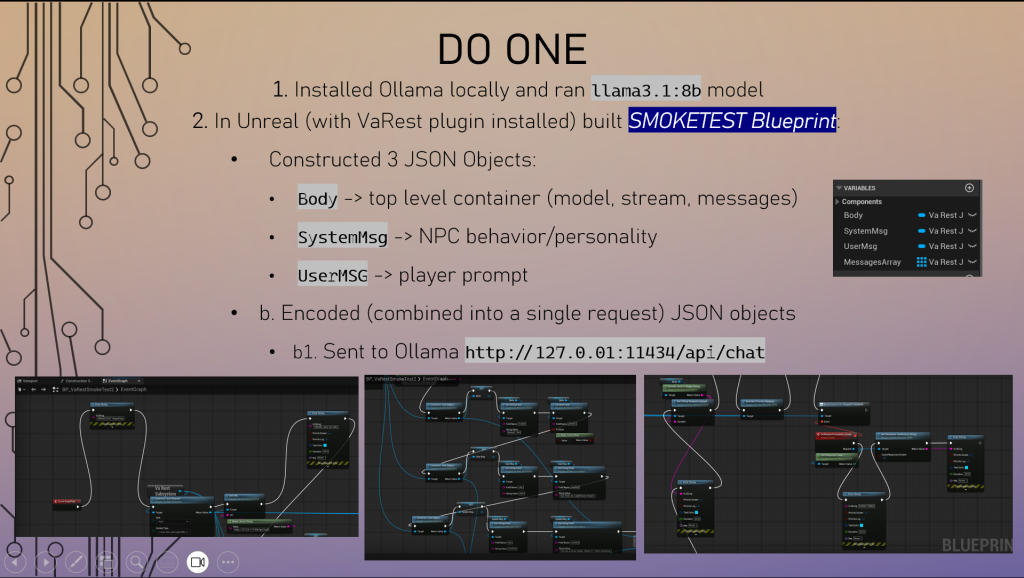

The chat request body needed three fields: “model”, “messages”, and “stream”

"stream" = true (Ollama sends the reply back in pieces) or false (Ollama waits until the full reply is ready and sends it back all at once). If it is left out, Ollama streams by default.

"model" = tells Ollama which specific model to use (there are a few)

"messages" = an array of message objects, each with a "role" (for example "system" or "user") and "content" (the text of the message)
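The sketch below puts the three fields together into a request body. It also includes a helper for the `"stream": true` case. With streaming on, Ollama sends the reply as a series of JSON objects, one per line. Each object carries a piece of `message.content`, and the last one has `"done": true`. The helper joins those pieces back into one reply. The model tag, the helper names, and the sample chunks are placeholders:

```python
import json


def build_chat_body(model: str, messages: list[dict], stream: bool = False) -> dict:
    """Hypothetical helper: the three fields used in an Ollama /api/chat request."""
    if not isinstance(stream, bool):
        raise TypeError("stream must be a JSON boolean (true/false), not a string")
    return {"model": model, "messages": messages, "stream": stream}


def join_stream_chunks(lines: list[str]) -> str:
    """Concatenate message.content from newline-delimited JSON chunks of a streamed reply."""
    pieces = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        chunk = json.loads(line)
        pieces.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break  # the final chunk marks the end of the reply
    return "".join(pieces)


body = build_chat_body(
    "llama3",  # placeholder: use a tag shown by `ollama list`
    [{"role": "user", "content": "Hi there!"}],
    stream=False,
)
print(json.dumps(body))
# {"model": "llama3", "messages": [{"role": "user", "content": "Hi there!"}], "stream": false}

# Made-up streamed chunks, joined into one reply:
sample_stream = [
    '{"message": {"role": "assistant", "content": "Well met"}, "done": false}',
    '{"message": {"role": "assistant", "content": ", traveler!"}, "done": false}',
    '{"message": {"role": "assistant", "content": ""}, "done": true}',
]
print(join_stream_chunks(sample_stream))  # Well met, traveler!
```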

So, after all this learning, I figured that for Unreal to communicate with Ollama, things needed to happen in this order:

Formula for Unreal ↔ Ollama Communication

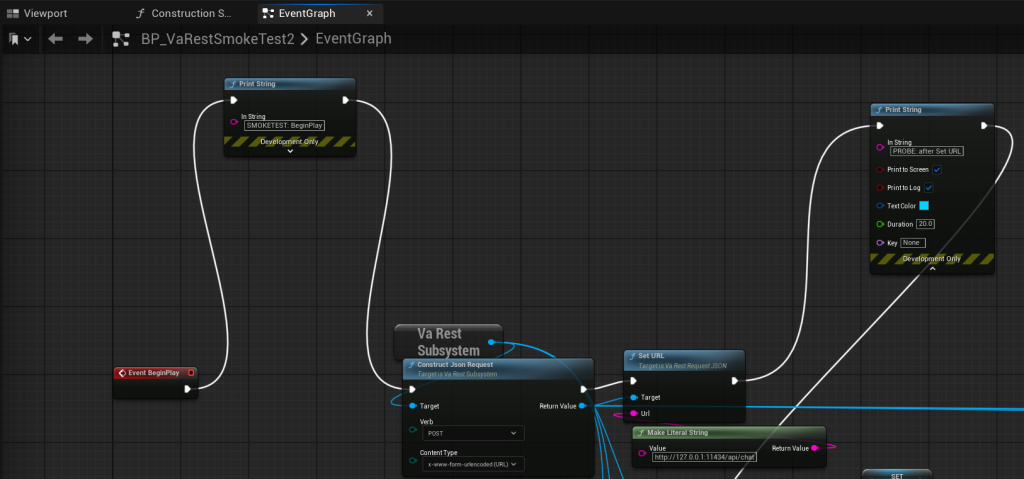

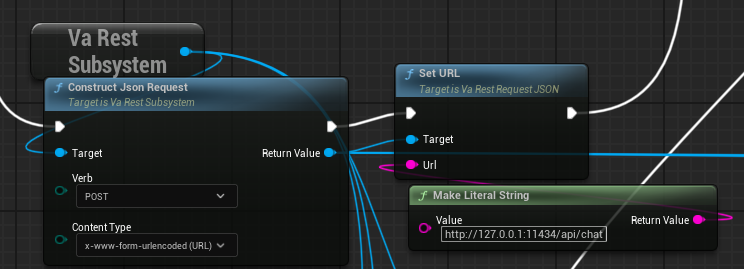

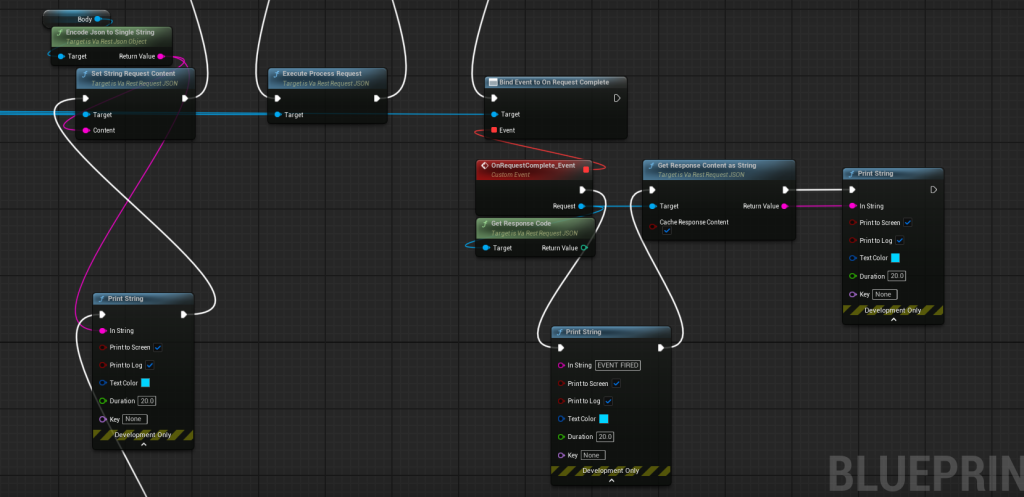

Unreal (Blueprints) + VaRest plugin → Builds JSON request → Sent via HTTP POST → to Ollama’s REST API endpoint → Ollama replies in JSON → Unreal displays NPC response.
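The same chain can be sketched end to end in Python, with plain Python standing in for the Blueprint and VaRest nodes. The sketch assumes a non-streaming reply, where Ollama returns a single JSON object whose `message.content` holds the text. `ask_npc` and `extract_reply` are hypothetical names. Only `extract_reply` can run without a live Ollama server:

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def extract_reply(response_json: dict) -> str:
    """Pull the NPC's text out of a non-streaming /api/chat response."""
    return response_json["message"]["content"]


def ask_npc(model: str, personality: str, player_line: str) -> str:
    """Build JSON -> HTTP POST -> Ollama replies in JSON -> return text to display."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": personality},
            {"role": "user", "content": player_line},
        ],
        "stream": False,
    }
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(json.loads(response.read()))


# Shape of a trimmed non-streaming response, for trying extract_reply offline:
sample = {"model": "llama3", "message": {"role": "assistant", "content": "Welcome, stranger."}, "done": True}
print(extract_reply(sample))  # Welcome, stranger.
```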

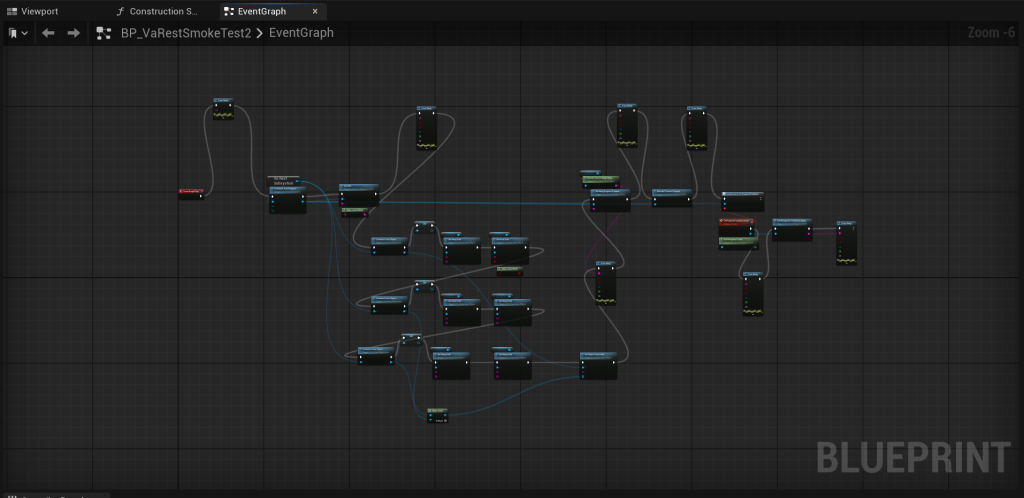

Wires Upon Wires

Here are the summarized steps I took in wiring up my Blueprint:

It’s not…working?

Thrilled at my Blueprint, I hit PLAY to test it…and it did not work.

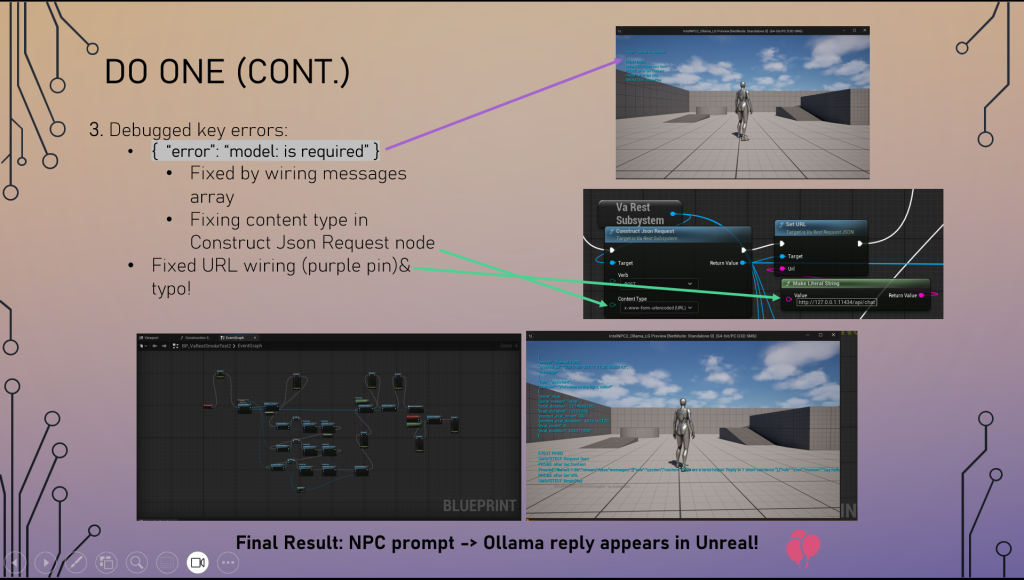

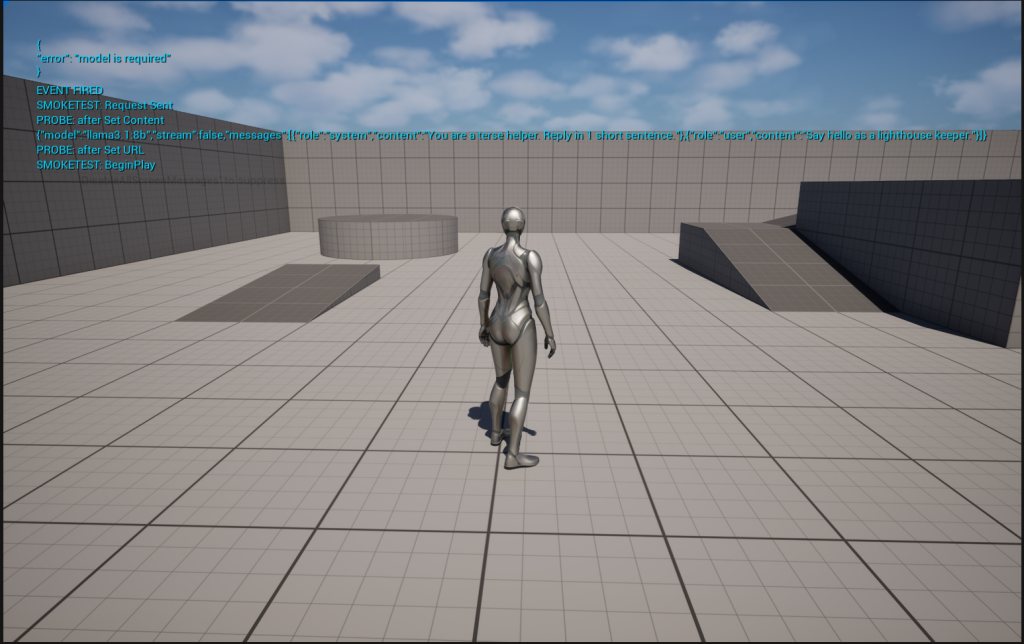

I kept getting an error that said: { “model is required” }
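For reference, Ollama reports problems like this one as a JSON object with an `"error"` field in place of a `"message"`. The Python sketch below uses a hypothetical `check_for_error` helper to make that case explicit, so the failure is surfaced and not silently ignored. With `urllib`, a 4xx status also raises `urllib.error.HTTPError`, and its body can be read and passed to the same check:

```python
import json


class OllamaError(Exception):
    """Raised when Ollama answers with {"error": "..."} instead of a chat message."""


def check_for_error(response_text: str) -> dict:
    """Hypothetical helper: parse a response body and raise if it is an error object."""
    data = json.loads(response_text)
    if "error" in data:
        raise OllamaError(data["error"])
    return data


try:
    check_for_error('{"error": "model is required"}')
except OllamaError as err:
    print("Ollama said:", err)  # Ollama said: model is required
```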

I spent a few days and many hours hacking and slashing my way through a troubleshooting process to find what was wrong.

Not quite sure WHY this was happening, I tried a few different things to determine the issue:

Debugging Process:

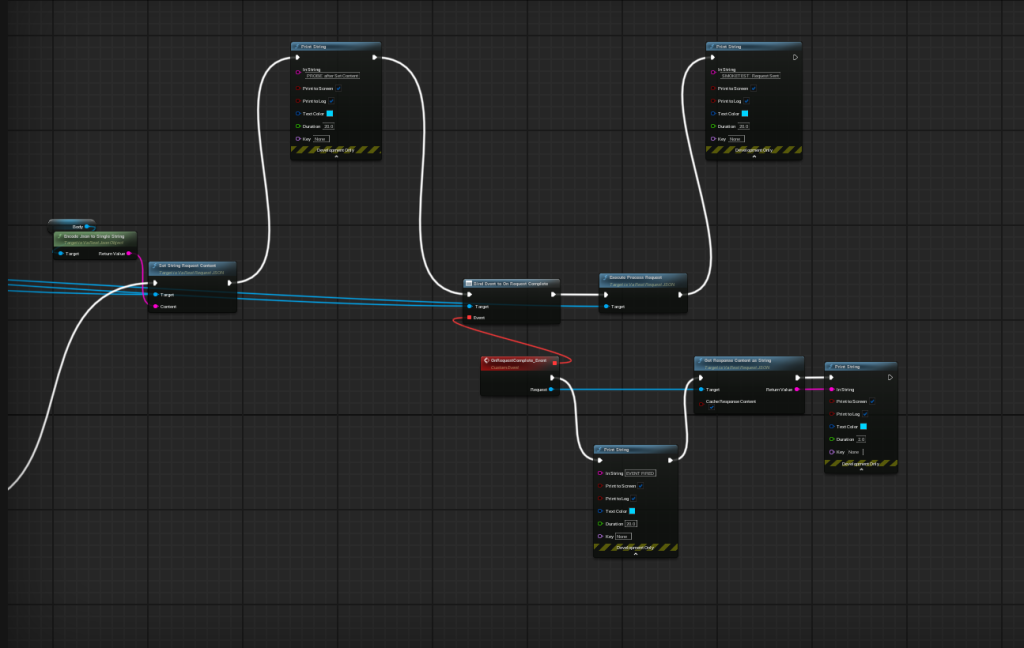

- Double-checked the model tag against `ollama list`.
- Verified the URL wiring: I realized one node had a typo (a missing number 4 in the URL value box), so the request wasn't reaching http://localhost:11434/api/chat properly.
- Rebuilt the messages array as an object array according to the VaRest documentation (system + user messages bundled together).
- Ensured `stream` was sent as a bool instead of a string.
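The checks in that list can be sketched as a pre-flight function that inspects a request before it is sent. This is a hypothetical Python helper, not a VaRest feature. `installed_models` stands in for the tags you would copy from `ollama list`:

```python
EXPECTED_URL = "http://localhost:11434/api/chat"


def preflight(url: str, body: dict, installed_models: set[str]) -> list[str]:
    """Return a list of problems found in an Ollama chat request (empty list = looks OK)."""
    problems = []
    if url != EXPECTED_URL:
        problems.append(f"URL is {url!r}, expected {EXPECTED_URL!r}")
    model = body.get("model")
    if not model:
        problems.append("model is missing")
    else:
        # Ollama treats a name without a tag (e.g. "llama3") as "llama3:latest".
        tag = model if ":" in model else f"{model}:latest"
        if tag not in installed_models:
            problems.append(f"model {model!r} is not in `ollama list`")
    messages = body.get("messages")
    if not isinstance(messages, list) or not all(
        isinstance(m, dict) and "role" in m and "content" in m for m in messages
    ):
        problems.append("messages must be an array of {role, content} objects")
    if not isinstance(body.get("stream"), bool):
        problems.append("stream must be a boolean, not a string")
    return problems


# A request with the same kinds of mistakes: a typo in the port and stream sent as text.
bad = {"model": "llama3", "messages": [{"role": "user", "content": "Hi"}], "stream": "false"}
for problem in preflight("http://localhost:1143/api/chat", bad, {"llama3:latest"}):
    print("-", problem)
```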

Breakthrough!

The fix came as a complete surprise: setting the content type correctly, changing it from application/json to x-www-form-urlencoded (URL).

I think this turned the encoded JSON body into the same request object I was executing, and also corrected the URL endpoint.

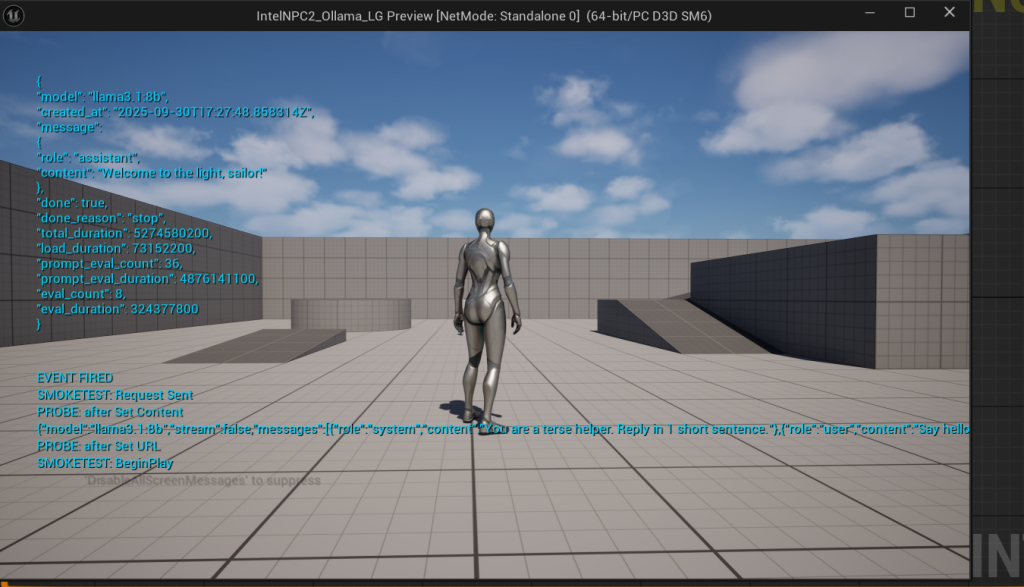

Once I swapped that and hit compile, the error vanished and I finally got the first NPC reply in Unreal!!!

End of Milestone 2!

I presented my Milestone 2 work to my peers on Wednesday, October 1st, 2025.

Next is Milestone 3 (which has changed) where I hope to:

Take the working Unreal ↔ Ollama loop from Milestone 2 and make the responses usable inside the game.

This includes cleaning up my Blueprint, building the display (basic UI dialogue boxes), and some light-touch NPC personality sculpting. I also hope to make sure it keeps working.

Until next time,

~Lauren