Decisions…

In my last post, I closed by saying I would let everything I had learned during Milestone 1 churn in my head before deciding what to do next.

There were many weighty questions that basically boiled down to:

"Which plugin? Which engine?"

"Which type of gen AI entity? Which model?"

"How the heck do I actually do this?"

After some thinking, I realized that the best time to plant a tree was yesterday, and the second-best time is today.

So, I decided to just begin. Try to make an intelligent NPC. Pick a plugin and an engine and see where they took me. If it didn’t work, try another one.

Ready, Set, Go!

In terms of picking a plugin, I looked into a few choices from Milestone 1’s research:

Inworld (First Look)

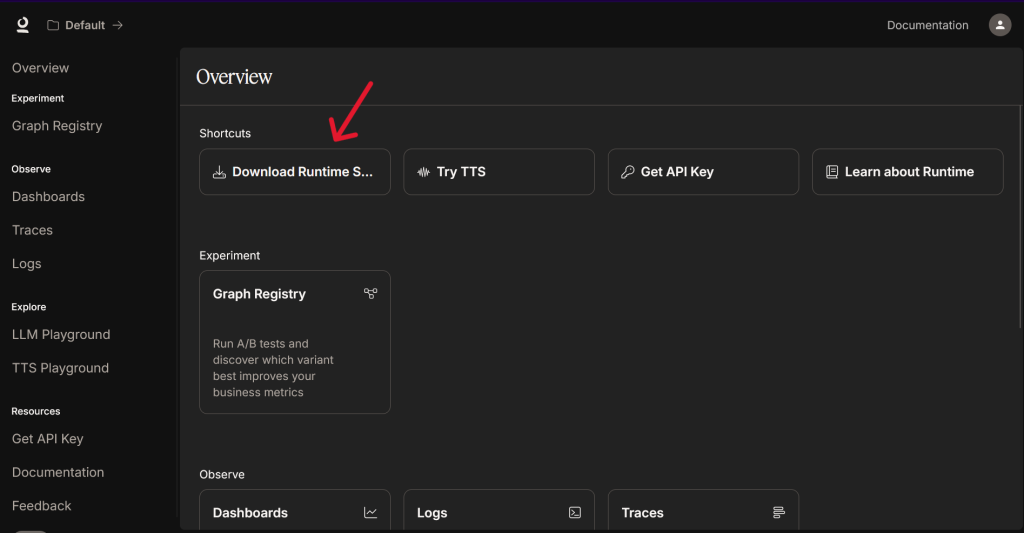

After some digging, I realized things seemed… off.

- Their plugins had not been updated; in particular, the Unreal Engine plugin had not been migrated to Epic Games’ new asset store (FAB).

- My dashboard didn’t match their blog posts or tutorials. I kept finding broken links, and the “character creator” they described was nowhere to be found.

At this point, itching to get started, I chose to pivot to Convai, since their information was current and their Unreal plugin was easy to access.

Convai Phase Steps

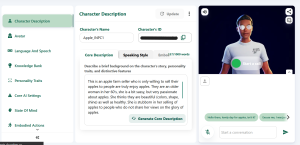

- Created a character on the Convai character dashboard

- Apple_INPC1 the “Apple Seller”

- Installed and set up Convai’s Unreal Engine plugin.

- Linked my Convai API key to UE project

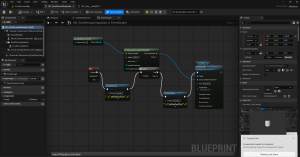

- Followed Convai Blueprint documentation.

- Matched the node setup

- Confirmed the system could connect.

- IT’S WORKING!!!

End Result of Convai Phase

Technically…I got the NPC to work when I hit play!

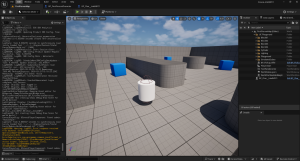

I (the player) walked up to the NPC (a cylinder in the scene) ->

Pressed E (while looking at it) to simulate a prompt interaction ->

The NPC acknowledged that I spoke to it ->

The NPC connected to the Convai server using my API key and the character ID ->

It gave me a response (in line with the apple seller character I created) via an on-screen prompt!

The problem? Within minutes I hit the free-tier interaction cap (100/month). Convai technically worked, but I couldn’t meaningfully keep testing without paying.

Time to Pivot: The “I-Will-Make-My-Own-Plan” Phase

Even if I upgraded, Convai would still have the issue of limited interactions.

I needed a way to use generative AI models that would (ideally) not cost me money and would not limit the number of interactions I could have.

So, I circled back to Inworld to see if I’d missed something.

Where on Earth was that dashboard character engine?

As it turned out, Inworld seemed to be in the middle of a major shift!

They were still offering the same core product, but instead of delivering it through a web dashboard, they were moving to what they called the Runtime SDK.

Inworld Runtime SDK Explained

- Old Inworld (Web Dashboard / Studio):

  - Visual character creator in the browser.

  - Easy, no coding, but limited integration.

- Now (Runtime SDK):

  - Direct integration into Unreal or Unity.

  - Work through code, configs, and templates to define your characters.

  - More flexibility and control, but also more technical: writing logic rather than filling out a form.

  - Offers $10/month of free credits (more generous than Convai, but still not unlimited).

This pivot made sense for Inworld’s long-term direction, but for my project it meant a steeper learning curve and likely costs down the road.

The Hybrid Solution

Then I wondered: was there any way to run an LLM locally on my machine and bypass server and interaction limits?

Enter Ollama

Ollama is an open-source tool that runs large language models locally on a machine.

It gave me a free, uncapped option to test whether Unreal could talk to an AI model at all!

The tradeoff: Ollama alone doesn’t offer built-in personality tools. That’s why I decided on a hybrid approach: use Ollama for the raw generative responses now, and later, layer in the Inworld Runtime SDK to shape personality, emotion, and guardrails directly inside Unreal.

Current Status & Milestone 2 Update

Milestone 2 is In-Progress!

I have pivoted from Convai to my hybrid Ollama + Inworld Runtime SDK plan.

As of today, Unreal is already talking to Ollama (the request/response event fires)!

Next hurdle: fixing a JSON formatting error so I can see my first real NPC reply.

My new method is slower going without a step-by-step guide, but I’m learning as I debug my Blueprints.
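Since a JSON formatting error is the current blocker, here is a small Python sketch of the JSON that Ollama’s `/api/generate` endpoint works with. It is not my Blueprint, just an illustration of the same data shapes, and the function names and the `llama3` model name are placeholders I picked. One thing worth knowing: Ollama streams replies by default, sending several JSON objects one per line, so unless the request sets `"stream": false`, parsing the whole body as a single JSON object usually fails.

```python
import json


def build_generate_body(model: str, prompt: str, stream: bool = False) -> bytes:
    """Serialize a request body for Ollama's /api/generate endpoint.

    json.dumps takes care of quoting and escaping (quotes, newlines, and
    backslashes in the player's text), which hand-assembled JSON strings
    often get wrong.
    """
    payload = {"model": model, "prompt": prompt, "stream": stream}
    return json.dumps(payload).encode("utf-8")


def parse_generate_reply(raw: bytes) -> str:
    """Pull the generated text out of an /api/generate response body."""
    body = raw.decode("utf-8")
    try:
        # "stream": false -> the body is a single JSON object.
        return json.loads(body).get("response", "")
    except json.JSONDecodeError:
        # Streaming (Ollama's default) -> one JSON object per line, each
        # holding a fragment of the reply in its "response" field.
        fragments = [
            json.loads(line).get("response", "")
            for line in body.splitlines()
            if line.strip()
        ]
        return "".join(fragments)
```

For example, `build_generate_body("llama3", 'Say "hi"')` decodes to `{"model": "llama3", "prompt": "Say \"hi\"", "stream": false}`, with the inner quotes already escaped.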

Milestone 2 Goal Update

The updated goal for this milestone is simple: prove the full (local) Ollama request/response loop works inside Unreal.

This is my essential “smoke test.” I even have a Blueprint literally called SmokeTest!
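For anyone who wants the same kind of smoke test outside Unreal, here is a rough Python equivalent (hypothetical, since my actual SmokeTest is a Blueprint). It assumes Ollama is running at its default local address, `http://localhost:11434`, sends one non-streamed prompt to `/api/generate`, and passes only if a non-empty reply comes back. The model name `llama3`, the prompt, and the function names are placeholders; the HTTP call is passed in as `send` so the pass/fail logic can be checked without a live server.

```python
import json
import urllib.error
import urllib.request
from typing import Callable

# Assumed default endpoint for a local Ollama install.
OLLAMA_URL = "http://localhost:11434/api/generate"


def post_json(url: str, payload: dict, timeout: float = 60.0) -> dict:
    """POST a JSON payload and decode the single-object JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def smoke_test(
    model: str = "llama3",  # placeholder: any model pulled locally
    prompt: str = "Greet a customer at your apple stand in one sentence.",
    send: Callable[[str, dict], dict] = post_json,
) -> bool:
    """Return True if one full request/response loop produces a reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    try:
        reply = send(OLLAMA_URL, payload)
    except (OSError, json.JSONDecodeError) as err:
        # URLError, HTTPError, and socket timeouts are all OSError subclasses.
        print(f"SmokeTest FAILED: {err}")
        return False
    text = reply.get("response", "").strip()
    if not text:
        print("SmokeTest FAILED: empty reply")
        return False
    print(f"SmokeTest PASSED: {text}")
    return True
```

With Ollama running and the model pulled, calling `smoke_test()` should print the NPC’s reply; with the server stopped, it should report a failure instead of crashing.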

In Post 4 next week, I will walk step by step through the exact process I have followed for this updated Milestone 2 Ollama plan.

Until next time,

~Lauren